Both Oracle time model and sampling model (ASH) are powerful Oracle performance tuning infrastructures. But combining sampling and time is dangerous. So, having the time_waited column in the ASH views presents us with both power and danger.

My previous post demonstrated the ASH time_waited column's unit of time and what all the zeros are about.

This post is about how to use the ASH time_waited values. But, it's not as simple as it seems. It's all centered around Oracle wait times being (hopefully and usually) much less than the ASH sample rate. So, how does an Oracle DBA appropriately and aggressively use the ASH time_waited column? That's what this post is all about.

Note: There is a lot of script detail in this post. If you just want "the answers" then scroll down to the final "Super Summary" section of this post.

Questions That Need Answering

A couple of important questions come to mind:

-

Does sampling sessions every second reflect the true wait time?

-

Does wait time skewing occur?

-

What does a bunch of ASH time_waited column values tell us about the true wait time average and other statistical data?

-

What should I watch out for to ensure I am using the data correctly?

-

How can I aggressively use the ASH time_waited column?

If these questions are important to you, then read on!

The Experiment: Setup Overview

Note: You can download all the experimental details (scripts, collection, reporting, spreadsheet, data) HERE.

To confidently answer the above questions, I needed to create an experiment. But it was really tricky to create because I must create and control sub-second wait times.

In my previous post, I had one session lock a table indefinitely and had another session attempt to acquire the lock but with a specific timeout. That allowed me to control the lock acquisition wait time.

However, the timeout parameter must be an integer. And, what I need for this is experiment is a fractional (real) number and the real number sometimes needs to be less than 1 second. So, another solution was needed.

The solution I came up with was to create a situation where the blocker/holder session locked and then released a table in a very specific and controlled pattern. Then I'll have another session (the "waiter") attempting to acquire the lock.

But here's the cool part. Only for the "waiter" session, I timed the lock acquisition time (the wait time), so I know the true wait times... many of them. Then I also looked at waiter session's ASH time_waited column. Now I can compare the true wait time and the ASH sampled wait times!

This means, I have two different sets of data related to the exact same situation.

The first set is the actual lock acquisition times. I simply got the timestamp before the lock acquisition attempt and again after the session acquired the lock... calculate the delta...

The second set of data was recorded for me by ASH. I get the ASH data from the time_waited values. It is important to understand that only when a session are trying to acquire the lock and ASH samples the session would I see the session waiting on the lock.

Naturally, we would expect some difference between ASH and the actual locking situation. How much of a difference is where it really gets interesting!

The Experiment: The Lock Holder/Blocker Session

Below is the "blocker" code that I simply copy and pasted into SQL*Plus. I explain the code below the listing.

connect sys as sysdba

grant execute on dbms_lock to craig;

connect craig/craig

drop table bogusT;

create table bogusT as select * from dual;

drop table op_lock_times_blocker;

create table op_lock_times_blocker (lock_time_s number);

select distinct sid from v$mystat;

declare

sample_value_var number;

begin

dbms_random.seed(13);

loop

---- The below random statement are used to set the random number ranges

sample_value_var := dbms_random.value(0.15,0.45);

--sample_value_var := dbms_random.value(0.01,0.05);

--sample_value_var := dbms_random.value(2.0,5.0);

---- The below insert/commit data is ONLY used to ensure the locks times are correct

---- They are not used in the statistical analysis

insert into op_lock_times_blocker values (sample_value_var);

commit write nowait;

---- Lock, wait, and release for a specific time; sample_value_var

lock table bogusT in exclusive mode;

---- This is how I controlled the blocking sessions lock time

dbms_lock.sleep(sample_value_var);

---- now release the lock, using the rollback

rollback;

end loop;

end;

/

In the above code, the blocker should be holding the lock between 0.15 and 0.45 seconds. The waiter will then be blocked from 0 to a maximum of 0.45 seconds. But it's more interesting than this!

If you look closely at the dbms_random entries, you can see that I control the random value, which will be the actual blocking session's lock time. Statistically speaking, the random real numbers will be pulled from a pool of uniformly distributed numbers between and including the given low and high values. So, if the range is between 0.15 and 0.45, the average should be 0.30. I actually checked this! Thankfully, this was always correct!

The key to the session blocker code is the combination of locking the table, sleeping for a specific period of time and then releasing the lock.

So, the blocker should be holding the lock between 0.15 and 0.45 seconds, with an average lock time of 0.30 seconds. The waiter will then be blocked from 0 to a maximum of 0.45 seconds. Next I'll describe how I collected the Oracle waiting session details.

The Experiment: The Lock Acquisition/Waiting Session

The code for the session attempting to acquire the table lock is a bit more complicated. The complication lies in that I only want to use the ASH samples during the lock acquisition times. Below is the code that I copy and pasted directly into SQL*Plus.

To summarize the below code, I collect 200 lock acquisition samples. Every attempt/sample is timed.

To help ensure the locking situation is what I think it is, I also take a before and after snapshot of the session's v$session_wait lock related data. Thankfully, Oracle's wait interface agreed with the waiting/holding session's lock times and the number of locks (i.e., the count).

I also saved the current ASH sample_id just before collection begins. This allows me to report on the session's ASH data ONLY during the lock attempts.

Slowly, go through the code list below. It's full of comments, so I'm hoping it will be clear.

connect craig/craig

set tab off

set serveroutput on

drop table op_lock_times_waiter_truth;

create table op_lock_times_waiter_truth (lock_time_s number);

variable sample_id_start number;

variable sid number;

begin

select distinct sid into :sid from v$mystat;

end;

/

print :sid

declare

max_samples_var number := 90; -- sometimes I took 200 samples

max_sleep_var number := 3.14159265359;

cntr_var number;

timestamp_start_var timestamp;

wait_time_ms_var number;

total_waits_B_var number;

time_waited_s_B_var number;

total_waits_E_var number;

time_waited_s_E_var number;

begin

select max(sample_id)

into :sample_id_start

from v$active_session_history

where session_id = :sid;

begin

-- get BEGIN total wait time, BEGIN total waits

select total_waits, time_waited/100

into total_waits_B_var, time_waited_s_B_var

from v$session_event

where sid = :sid

and event like 'enq: TM%';

exception when others then

total_waits_B_var := 0;

time_waited_s_B_var := 0;

end;

for cntr_var in 1..max_samples_var

loop

-- get pre-lock time, to ensure i'm looking the right data

select current_timestamp

into timestamp_start_var

from dual;

-- attempt to lock the table, which may already be locked

-- if I acquire the lock, processing continues to next line of code

-- the 999 second timeout should never happen in this controlled experiment

lock table bogusT in exclusive mode wait 999;

-- get acquisition clock time and calculate lock time to the millisecond

select extract( day from diff )*24*60*60*1000 +

extract( hour from diff )*60*60*1000 +

extract( minute from diff )*60*1000 +

extract( second from diff )*1000

into wait_time_ms_var

from (select current_timestamp - timestamp_start_var diff

from dual

);

-- release the lock by rolling back

rollback;

-- record the actual lock time, if any

insert into op_lock_times_waiter_truth values (wait_time_ms_var/1000);

-- commit the lock time... there is a rollback in this script, so

-- i need to commit the experimental results

commit;

-- to reduce the likelihood of a cyclical pattern, randomly sleep

dbms_lock.sleep(dbms_random.value(0,max_sleep_var));

end loop;

-- get END total wait time, END total waits

select total_waits, time_waited/100

into total_waits_E_var, time_waited_s_E_var

from v$session_event

where sid = :sid

and event like 'enq: TM%';

-- get ending wait time and wait totals... calc avg wait time

dbms_output.put_line('-------------------------------------------------------');

dbms_output.put_line('Waiting session actual wait times based on v$session_event');

dbms_output.put_line('.');

dbms_output.put_line('Avg TM wait time (s) : '||

round((time_waited_s_E_var-time_waited_s_B_var)/(total_waits_E_var-total_waits_B_var),3)||

' Total TM waits : '||(total_waits_E_var-total_waits_B_var) );

dbms_output.put_line('-------------------------------------------------------');

end;

/

So... what happened? Read on!

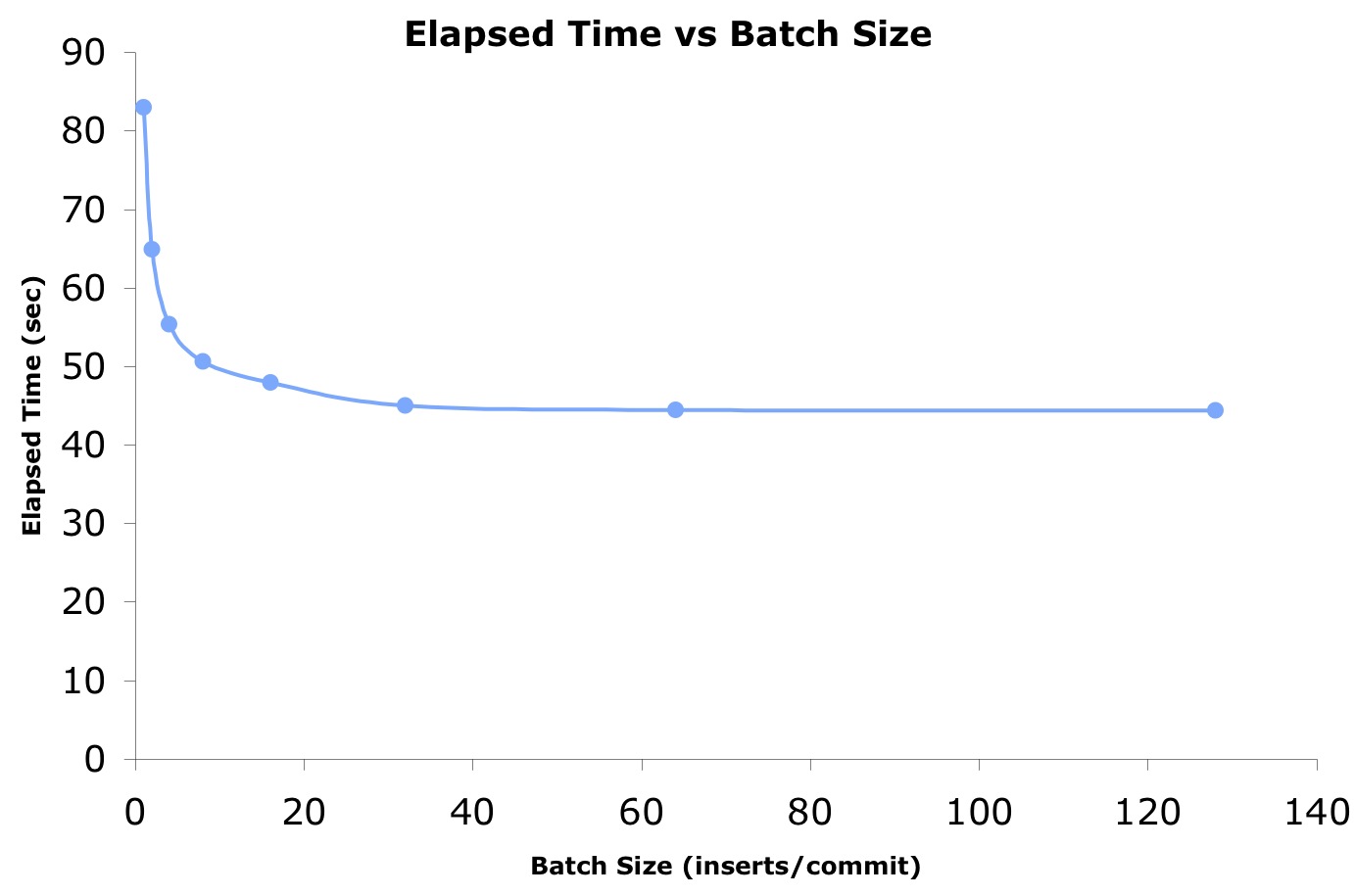

The Experiment: The Results

The experimental design worked flawlessly. I was able to get all the wait time related samples I needed. Plus, I used three different blocking session sleep times. This gave me wait times less than and greater than ASH's default one second sample period. ...exactly what I wanted.

Below is a matrix summarizing the results.

The Measured Lock Times are the waiting session's actual lock times while trying to acquire the table lock. There are always 200 samples because a) each sample is a lock acquisition attempt and b) because that is how many samples I wanted to gather, using a plsql FOR loop.

The ASH-based experimental samples times are taken from the time_waited column. Notice the number of ASH samples does not equal the number of FOR loop samples. I would NOT expect them to be the same because they are two independent activities. I'll comment more on this below.

There is a LOT to learn from this experiment. If you just want a summary, jump down to the next section. But, here are a few observations I want to highlight.

-

The quicker the lock, the fewer ASH samples collected. In fact, for the quickest lock time (median of 0.010 sec) out of the two hundred lock acquisitions, ASH only caught the session 1 time waiting! Therefore, ASH is more likely to miss quick waits. This means making time based inferences, such as the average wait time, is problematic because the time_waited column will be biased towards the high wait times.

-

The longer the wait, the more ASH samples you will capture.

-

Making average and median inferences for wait times greater then ASH's sample period (1s) using all the ASH samples is misleading. As I demonstrated in my previous post, if ASH samples a session while "in the wait" the time_waited value is zero. The zero values make average and median calculations totally useless. Take away: Do not make average and median inferences when wait times are greater than ASH's sample rate based on the time_waited column.

-

I suspect that if the wait time was ALWAYS longer than the sample rate, using only non-zero values you could calculate the average and median wait time. But if there are waits times less than ASH's sample rate (1s), as I mention above your inferences will be misleading.

Note: Very cool... If I remove the zero values from the long data set, the time_waited column value average is 2.3484 and the median is 2.2500. That is very close to the measured lock times of 2.177 and 2.160 values respectively! But remember, for this to work, ALL the waits must be greater than the ASH sample rate (1s). Otherwise, the usefulness of the average and median will quickly degrade.

-

The measured and ASH sampled MAXIMUM wait times are pretty close, if you have a bunch of samples. In my experiment, with only 38 samples, the short wait times (around 160ms) was only off by 5%. That's pretty good. And the multi-second wait time maximum wait times difference round to zero percent error! The take away: If you have lots of ASH samples one of them is likely to be close to the maximum wait time.

But be careful, making decisions based on a maximum value is problematic. All it takes is some bizarre unlikely scenario to cause a one-off massive wait time. Just because a value is correct, doesn't mean you should use in your decision making. If would be better to do some math and take, for example, the 98%-tile value. That way you can "scrape" off the extremely unlikely and worthless maximum values. I'll detail how to do that in my next post.

Wow... That was a lot to digest, so I have summarized it below.

Result (Super) Summary

Based on my experimental data,

- Pretty good maximum wait time values can be determined from the ASH time_waited column. With more than 30+ samples, you will probably have a pretty good high-end wait time during the sampling period. Be aware that maximum values are usually not all that helpful.

- Calculating the average and median wait times from the ASH time_waited column can easily be misleading. Don't do it unless the wait times are nearly always greater than the ASH sample rate of 1s... and then only after removing the time_waited zero values.

All the best to you in your work,

Craig.