You know it is important to test your trained machine learning model with data it has never seen before. But as I discussed in my previous post, "Top Down Strategy To Split Your Full Dataset", a simple top down split of sorted data results in an invalid test.

So once again, the question is, "How should I split the FULL dataset into a TRAIN and TEST dataset?" The question seems so simple... but it's not. How the split is performed can make all the difference towards achieving a valid and useful model accuracy score.

In this post, I discuss the next logical step, which is to randomize the data before the split. But does this result in a trustworthy and robust accuracy analysis?

That's what this post is all about.

Random (Shuffle) Split: Thinking About It

Many years ago, I figured out that testing a model with unseen data is important, but that the simple "top down split" approach produced invalid accuracy scores. So, I started to think more about this.

Once I understood the problem centered around the data being sorted, I decided to shuffle the data, then apply the "top down split" strategy. This essentially provided me with random row selections for both my TRAIN and TEST datasets.

At that time in my career, I used MS Excel. A lot! So, I simply added a new column and each row cell contained a random number. Then I sorted the data by the random number cell. And finally, I used the top portion for my TRAIN data and the bottom for my TEST data.

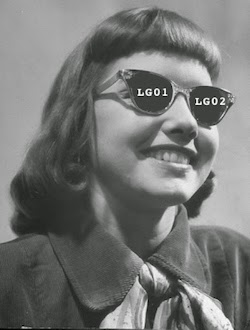

Below is an example. Notice the rows are not in Snap ID order. The green rows would be my TRAIN data and the yellow rows my TEST data.

Interestingly, if you look above closely, you'll notice the random number column rows are not in sorted order... that's because the cells recalculated after I did the sort.
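The spreadsheet steps can be sketched in plain Python. This is only an illustration: the function name, the 80/20 fraction, and the Snap ID values are assumptions made for the example, not details taken from the original spreadsheet.

```python
import random


def shuffle_then_top_down_split(rows, train_fraction=0.80, seed=None):
    """Mimic the spreadsheet approach: random column, sort, then top down split."""
    rng = random.Random(seed)
    # Step 1: the "new column", one random number per row
    keyed = [(rng.random(), row) for row in rows]
    # Step 2: sort the rows by their random number
    keyed.sort(key=lambda pair: pair[0])
    shuffled = [row for _, row in keyed]
    # Step 3: top portion becomes TRAIN, bottom portion becomes TEST
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


snap_ids = list(range(1, 21))  # hypothetical Snap IDs 1..20
train, test = shuffle_then_top_down_split(snap_ids, seed=42)
print("TRAIN:", train)
print("TEST: ", test)
```

Unlike the recalculating spreadsheet cells, the random keys here are generated once, so the sort order and the keys stay consistent.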

Random (Shuffle) Split: Python

In Python it is easy to split the FULL dataset into TRAIN and TEST datasets based on pure random sampling.

You can download the Jupyter Notebook containing the Python code HERE. If you need to set up your machine learning sandbox environment, click HERE.

If you look at the code below, the function train_test_split is being used. Here is a description of the INPUT parameters:

- X is the same 1259 feature rows I used above.
- y is the same 1259 label rows I used above.
- test_size is set to 20%, which will result in a TEST dataset containing 20% of the full dataset and the remaining 80% will be the TRAIN dataset.
- stratify is set to None indicating pure random row sampling is to be used. More about this parameter in my next post.
- random_state is set to ensure I can re-run the split with the same exact results. I chose 6752 for no particular reason.

    # Trained model is tested with data it has never seen before... good
    # Train/Test split was performed randomly... good
    # No attempt to split based on label proportions... bad
    # So, the results could be better or worse than a simple top-down split. Therefore, weak test.

    from sklearn.model_selection import train_test_split

    print("Split FULL Dataset Into TRAIN And TEST Datasets Using A Random Shuffle")
    print()

    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20, stratify=None, random_state=6752)

    ScoreAndStats(X, y, X_train, y_train, X_test, y_test)
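The code above depends on X, y, and ScoreAndStats from the notebook. For readers who want to try the split on its own, here is a minimal self-contained sketch. The random stand-in data is an assumption made purely for illustration; it is not the dataset used in this post, and no model is trained or scored.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Stand-in data (assumption): 1259 rows, 3 features, binary labels
rng = np.random.default_rng(0)
X = rng.normal(size=(1259, 3))
y = rng.integers(0, 2, size=1259)

# Same call as in the post, run twice with the same random_state
split_a = train_test_split(X, y, test_size=0.20, stratify=None, random_state=6752)
split_b = train_test_split(X, y, test_size=0.20, stratify=None, random_state=6752)

X_train, X_test, y_train, y_test = split_a
print("Train", X_train.shape, y_train.shape)
print("Test ", X_test.shape, y_test.shape)

# A fixed random_state makes the split repeatable
same_seed_same_split = np.array_equal(split_a[1], split_b[1])
print("Same seed gives same TEST rows:", same_seed_same_split)
```

Changing random_state selects a different set of random rows, which is worth keeping in mind when comparing accuracy scores between runs.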

Shown below are the shapes of the datasets after the randomized split. I labeled the datasets so they are easily identified.

    Split FULL Dataset Into TRAIN And TEST Datasets Using A Random Shuffle

    Shapes   X(r,c)      y(r,c)
    Full     (1259, 3)   (1259,)
    Train    (1007, 3)   (1007,)
    Test     (252, 3)    (252,)
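The row counts follow from the 20% test_size. As far as I understand scikit-learn's behavior, a fractional test_size is rounded up to a whole number of TEST rows, and the remaining rows go to TRAIN. A quick check of the arithmetic:

```python
import math

n_rows = 1259
test_size = 0.20

n_test = math.ceil(n_rows * test_size)  # 251.8 rounds up to 252
n_train = n_rows - n_test               # 1259 - 252 = 1007
print(n_test, n_train)                  # prints: 252 1007
```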

When the model is trained and then tested, the TEST data accuracy score is 0.77. Better than our 0.65 result using the simple Top Down Strategy shown in the previous post. However, this is still not a great score.

But honestly, the score is not all that important because this is a weak data split strategy! But why? Read on...

Random (Shuffle) Split: Summary

Ten years ago, my predictive work focused on answering questions like, "Can the system handle the load six months from now?" Back then, the randomized split strategy worked great.

But times have changed! Now I focus on questions like, "Did the system experience an anomalous performance event over the past 30 minutes?" or perhaps, "Will support tickets come flooding in over the next 30 minutes?"

The questions I answer now are very different than 10 years ago. And, I now use more and different data for my analysis.

This is why it was pure luck that the randomized split strategy score of 0.77 was better than the simple top down split strategy score of 0.65. The random sample strategy score could have been worse, better, or the same. The score difference can be explained by the row order.
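One way to see how much luck is involved is to count how many rare-event rows land in the TEST data for different shuffles. The sketch below uses a made-up label column (40 rare rows out of 1259 is an assumption, not the real data) and plain Python shuffling rather than train_test_split.

```python
import random


def rare_rows_in_test(labels, test_fraction, seed):
    """Shuffle the labels with the given seed and count rare (1) labels in TEST."""
    rng = random.Random(seed)
    shuffled = labels[:]
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    test = shuffled[-n_test:]  # bottom portion is TEST
    return sum(test)


# Hypothetical labels: 40 rare events (1) among 1259 rows
labels = [1] * 40 + [0] * 1219

counts = [rare_rows_in_test(labels, 0.20, seed) for seed in range(20)]
print("Rare rows in TEST per seed:", counts)
print("min:", min(counts), "max:", max(counts), "proportional share:", 40 * 0.20)
```

With pure random sampling, nothing holds the count near the proportional share, so the TEST data can contain noticeably more or fewer rare rows from one seed to the next.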

While it's always better to randomly split the FULL dataset, it is still not a good solution. Sorry for the bad news.

What Do I Do Now?

At this point, both the "top down split" and the "random shuffle split" strategies will produce untrustworthy accuracy score results. If you're like me you're asking, "Gee thanks Craig! But what do I do now?"

There is more to this situation than I presented. What still remains is a very significant problem that we must deal with. It is a problem that many datasets do NOT have.

But, as Oracle Professionals who usually focus on database activity and performance metrics, we find ourselves dealing with very infrequent yet very important events... poor performance or highly unusual performance.

The data split strategies I've presented so far and the accuracy scoring methods are insufficient to deal with our typical situations. In my next post, I will present yet another and better way to split our FULL dataset into a TRAIN and TEST dataset.

All the best in your machine learning work,

Craig.